Nonresponse bias is a frequent challenge that support teams must address when surveying customers.

In the statistical literature, nonresponse bias has concerned researchers using postal questionnaires since 1838. Today, even with modern ways of surveying clients, we still face the same problems: various types of survey bias.

Luckily, we already know how to deal with them. Let’s look at how to avoid the most common customer-survey mistakes that lead to nonresponse bias.

- What is nonresponse bias?

- Nonresponse bias vs undercoverage bias

- How can you tell whether a survey has a nonresponse bias issue?

- Why is it crucial to avoid nonresponse bias in our surveys?

- Why does nonresponse bias occur in surveys?

- How to avoid nonresponse bias

- How to detect nonresponse bias in an existing survey and handle it

- How to follow up with non-responders?

What is nonresponse bias?

Before exploring ways to prevent errors in your survey, let’s first define nonresponse bias. It is a type of bias that can occur in survey research when some of the individuals selected for the survey do not respond. Nonresponse alone is not the problem: bias arises when respondents and non-respondents differ significantly in characteristics or views that matter to the survey.

Nonresponse bias is one of several common types of sampling bias, that is, errors that arise from how the survey sample is selected. These include:

- Nonresponse bias: differences between respondents and non-respondents lead to a non-representative sample.

- Undercoverage bias: specific population segments are inadequately represented or excluded from the sample.

- Overcoverage bias: the sample includes units that are outside the target population or listed more than once, so some groups end up overrepresented.

Nonresponse bias vs undercoverage bias

While both nonresponse bias and undercoverage bias can lead to unrepresentative samples, they stem from different parts of the survey process and require different approaches to detection and correction. Nonresponse bias deals with differences between respondents and non-respondents within the selected sample, whereas undercoverage bias comes from excluding certain segments of the population from the sampling process. For example, an email-only survey undercovers customers who have no email address on file, while customers who receive the email but ignore it are non-respondents.

How can you tell whether a survey has a nonresponse bias issue?

Remember, a low response rate doesn’t automatically mean you have a significant nonresponse bias.

Conversely, even a high response rate of 80 percent does not rule out bias. Survey results can still be misleading if the people who responded differ significantly from the overall target population in characteristics or experiences such as race, ethnicity, or income level, or, in a school survey, factors such as student placement or time spent in special education.

Suppose a district survey shows that 35% of the parents who responded have children with autism, while only 10% of the district’s students with disabilities have autism. Parents of autistic children are therefore overrepresented, which reduces the accuracy of the survey results.

Similarly, if only 10% of survey respondents are Hispanic students but 45% of all students in the district are Hispanic, the survey doesn’t accurately reflect the perspective of Hispanic students in the community. In both cases, the results cannot be generalized to the entire target population without statistical adjustments, such as weighting, that can themselves be problematic.
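One common adjustment is post-stratification (cell) weighting: each respondent is weighted by their group’s share of the population divided by its share among respondents. The sketch below applies this to the Hispanic-student example. The two sets of shares come from the text; the per-group satisfaction rates are hypothetical numbers chosen only to show how weighting can move an estimate. It is a minimal illustration with a single weighting variable, not a complete weighting procedure.

```python
"""Sketch: post-stratification (cell) weighting for one grouping variable.

Population and respondent shares are taken from the district example.
The satisfaction rates are hypothetical, for illustration only.
"""

POPULATION_SHARE = {"Hispanic": 0.45, "Non-Hispanic": 0.55}
RESPONDENT_SHARE = {"Hispanic": 0.10, "Non-Hispanic": 0.90}
# Hypothetical: share of respondents in each group who report being satisfied.
SATISFIED_RATE = {"Hispanic": 0.60, "Non-Hispanic": 0.80}


def poststrat_weights(pop_share, resp_share):
    """Weight = population share / respondent share (above 1 means underrepresented)."""
    return {group: pop_share[group] / resp_share[group] for group in pop_share}


def weighted_mean(values, resp_share, weights):
    """Average of group values, each group's respondent share scaled by its weight."""
    total = sum(resp_share[g] * weights[g] for g in values)
    return sum(resp_share[g] * weights[g] * values[g] for g in values) / total


weights = poststrat_weights(POPULATION_SHARE, RESPONDENT_SHARE)
unweighted = weighted_mean(SATISFIED_RATE, RESPONDENT_SHARE, {g: 1.0 for g in SATISFIED_RATE})
weighted = weighted_mean(SATISFIED_RATE, RESPONDENT_SHARE, weights)

print({g: round(w, 2) for g, w in weights.items()})  # {'Hispanic': 4.5, 'Non-Hispanic': 0.61}
print(round(unweighted, 2), round(weighted, 2))      # 0.78 0.71
```

Weighting only corrects for the variables you weight on, and large weights (4.5 here) make estimates noisier, which is one reason such adjustments can be problematic.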

The key question is whether the non-respondents differ in meaningful ways from those who did respond. If they do, your results may be biased.

Why is it crucial to avoid nonresponse bias in our surveys?

This matters because if those who do not respond have different views or experiences than those who do, the survey results may not accurately represent the overall population the survey is intended to measure. That undermines the generalizability and validity of the results.

For example, suppose you’re conducting a phone survey on political opinions. If a significant proportion of the people who don’t respond (perhaps because they’re at work during your call times or don’t have a landline) hold political views different from those who do respond, your results will be skewed and won’t represent the diversity of political views in the overall population.

Why does nonresponse bias occur in surveys?

There are several reasons why nonresponse bias occurs.

Survey length. If a survey is too long or complicated, customers may abandon it partway through. This could result in a nonresponse bias if the views of those who completed the survey are systematically different from those who abandoned it.

Poor timing. If the survey is sent at an inconvenient time, such as right after purchase or during a busy holiday season, customers might ignore it. The responses collected might only be from those who have more spare time or are particularly passionate, leading to bias.

Lack of incentives. If there’s no direct benefit to the customer for filling out the survey, they may simply choose not to respond. Those who do respond might have stronger opinions or more vested interest, which could skew results.

Limited accessibility. If the survey isn’t mobile-friendly or easy to use on all devices, you could miss responses from people who primarily use a phone or tablet for online shopping. Again, this could result in bias if these users’ opinions differ from those of people using desktop computers.

Negative experience. People who have had a very negative (or very positive) experience are often more likely to complete a survey to express their dissatisfaction or satisfaction. This can skew the results away from the opinions of the average customer.

Privacy concerns. Some customers may choose to refrain from participating in surveys due to concerns about their privacy or how their data will be used, which could lead to bias if these individuals’ views differ from those who are less concerned about these issues.

Examples of surveys that lead to nonresponse bias

- A survey designed to capture the opinions of people involved in illicit activities, or of members of secluded groups, would probably see limited engagement from them, because they are reluctant to disclose unlawful behavior or share what they know. As a result, the responses you receive may come mainly from people who do not represent the group the research is meant to study.

- A survey based on outdated information can cause nonresponse bias. Suppose you plan to send a survey to attendees of an event you hosted two years ago. You have all their email addresses stored in your Customer Relationship Management system (CRM), but you haven’t collected any updated information about them since the event. When you send the survey, you see very low delivery and open rates: many of these customers have changed their email addresses and no longer use the accounts you have on record.

- Requests for sensitive information are very likely to cause nonresponse bias. Imagine you run a cybersecurity firm developing a novel email encryption method. You send a survey to understand your customers’ existing security systems, asking them to share passwords for various accounts and explain why they chose them. Predictably, none of your customers participate, and a few even contact your customer service to flag the survey as a possible scam.

How to avoid nonresponse bias

The common mistakes that lead to nonresponse bias in surveys

To minimize or avoid nonresponse bias in surveys, carefully consider the survey design, distribution, and follow-up strategies. Here are some tips:

- Clear and concise questions. Ensure that your survey questions are easy to understand and to the point. Avoid using jargon or complex language that might confuse respondents.

- Short surveys. Long surveys can deter people from participating in or completing the survey. Try to keep your survey as short as possible while still collecting the necessary information.

- Respect privacy. If your survey asks sensitive questions, make sure you communicate why you need the data and how you will protect the respondents’ privacy.

- Timely reminders. Send reminders to people who haven’t completed the survey yet, but avoid sending too many, which can come across as spammy.

- User-friendly design. Make your survey accessible and easy to use across different devices, including smartphones, tablets, and computers.

- Incentives. Consider offering some form of incentive to motivate people to respond to the survey. This could be a discount, entry into a draw, or some other small reward.

- Good timing. Think about the most convenient time for respondents to answer your survey. For example, conducting a survey about tax preparation services just after tax season might get you a better response rate than doing it at another time of year.

- Test your survey. Conduct a small pilot test of your survey to ensure it’s working as intended. You can also gather feedback on the survey’s length and question clarity.

- Include a ‘Prefer not to answer’ option. For sensitive or personal questions, this option can allow respondents to skip a question they’re uncomfortable with without abandoning the entire survey.

- Use multiple contact methods. If possible, use multiple methods to reach out to respondents (email, phone, mail, etc.) to increase the chance that they’ll receive and respond to your survey.

Remember, while these strategies can help reduce the potential for nonresponse bias, it’s nearly impossible to eliminate it entirely. Therefore, when analyzing and interpreting your survey results, consider the potential impact of nonresponse bias and treat your findings accordingly.

Bonus part. Examples of improper questions that may lead to nonresponse bias

Check your survey against this checklist to make sure your questions are appropriate and polite. Here are some examples of questions that can make respondents uncomfortable.

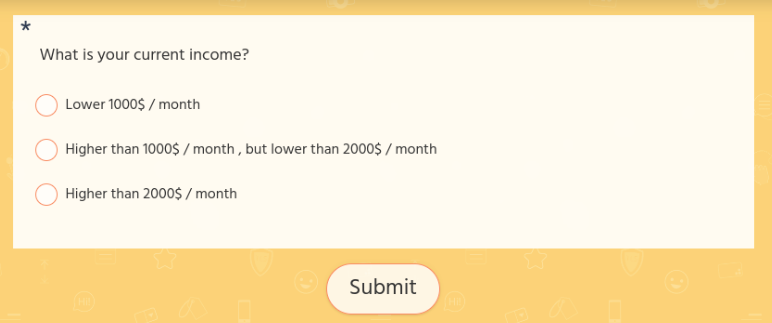

Personal or sensitive information

Questions asking for personal or sensitive information can often lead to nonresponse.

For example:

- ‘What is your current income?’

- ‘Have you ever been diagnosed with a mental illness?’

- ‘Have you ever been a victim of a crime?’

Complex or time-consuming questions

If a question requires a lot of thought or time to answer, people might just skip it.

For example:

- ‘Please list all the medications you are currently taking.’

- ‘Describe in detail what you like and dislike about our product.’

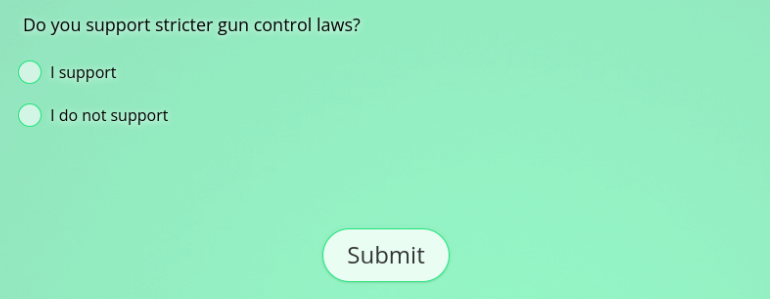

Controversial topics

Questions about hot-button or controversial issues can also lead to nonresponse.

For example:

- ‘What is your stance on abortion?’

- ‘Do you support stricter gun control laws?’

Poorly worded or confusing questions

If a question is confusing, uses jargon, or is ambiguous, people might not respond because they don’t understand what is being asked.

For example:

- ‘What is your opinion on the Smith-Johnson proposal?’ (without explaining what the proposal is)

- ‘Do you agree with the utilization of dihydrogen monoxide in food products?’ (a confusing way of asking if they agree with using water in food products)

Remember, the goal of survey design is to create questions that are clear, easy to answer, and respect respondents’ comfort and privacy. If respondents feel uncomfortable or confused, they may choose to refrain from responding at all, leading to nonresponse bias.

How to detect nonresponse bias in an existing survey and handle it

Nonresponse, particularly in customer surveys, refers to the failure to obtain responses from members of the selected sample. This can happen for various reasons and often leads to biases in the collected data, affecting the validity and reliability of the survey results.

Calculating nonresponse bias can be complex, and the best method may depend on the specific situation. Here’s a simple hypothetical example for illustrative purposes:

- Variable of Interest: Income

- Respondents: Average income of $30,000

- Overall Sample: Average income of $35,000

- Difference: $5,000 (respondents earn less on average)

If income is relevant to the survey topic, this gap suggests that your results are affected by nonresponse bias.
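The arithmetic above can be written as a small sketch. The bias of the respondent mean is the respondent mean minus the full-sample mean, and it can also be decomposed as the nonresponse rate times the gap between respondents and non-respondents. The example does not state a response rate, so the 50% and 80% rates below are hypothetical; they show how the same $5,000 bias implies very different non-respondent incomes depending on how many people responded.

```python
"""Sketch: nonresponse bias of a mean, using the income example.

The respondent and full-sample means come from the text; the response
rates tried below are hypothetical.
"""


def nonresponse_bias(respondent_mean, sample_mean):
    """Bias of the respondent mean relative to the whole selected sample."""
    return respondent_mean - sample_mean


def implied_nonrespondent_mean(respondent_mean, sample_mean, response_rate):
    """Solve sample_mean = r * respondent_mean + (1 - r) * nonrespondent_mean."""
    return (sample_mean - response_rate * respondent_mean) / (1 - response_rate)


RESPONDENT_MEAN = 30_000
SAMPLE_MEAN = 35_000

bias = nonresponse_bias(RESPONDENT_MEAN, SAMPLE_MEAN)
print("bias:", bias)  # bias: -5000

for r in (0.5, 0.8):  # hypothetical response rates
    nonresp_mean = implied_nonrespondent_mean(RESPONDENT_MEAN, SAMPLE_MEAN, r)
    # Same bias via the decomposition (1 - r) * (respondent mean - nonrespondent mean)
    decomposed = (1 - r) * (RESPONDENT_MEAN - nonresp_mean)
    print(r, round(nonresp_mean), round(decomposed))
# 0.5 40000 -5000
# 0.8 55000 -5000
```

At a 50% response rate, non-respondents would average $40,000; at 80%, they would have to average $55,000 to produce the same bias. Bias depends on both the response rate and how different non-respondents are, which is why the response rate alone doesn’t tell you how large the bias is.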

Detecting and avoiding nonresponse bias can be tricky. Still, there are some methods you can use to assess the likelihood and potential impact of this kind of bias in your survey results.


Compare respondents to the target population

If you have demographic information or other data about the overall population you’re trying to survey, compare this to the same data for your respondents. If there are significant differences, this could suggest the presence of nonresponse bias.

For example, if your target population is evenly split between males and females, but 80% of your respondents are female, this could be a sign of nonresponse bias.
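One way to check whether a gap like this is larger than chance alone would explain is a chi-square goodness-of-fit test. The sketch below assumes a hypothetical 200 respondents (160 female, 40 male) against the 50/50 split in the example and compares the statistic with 3.841, the standard critical value for one degree of freedom (two groups minus one) at the 5% level. It uses only the standard library; a statistics package would also give you a p-value.

```python
"""Sketch: chi-square goodness-of-fit test of respondents against known population shares.

The counts are hypothetical: 200 respondents, 80% of them female.
"""


def chi_square_gof(observed, expected_shares):
    """Sum of (observed - expected)^2 / expected over all groups."""
    n = sum(observed.values())
    stat = 0.0
    for group, count in observed.items():
        expected = n * expected_shares[group]
        stat += (count - expected) ** 2 / expected
    return stat


observed = {"female": 160, "male": 40}
population = {"female": 0.5, "male": 0.5}

stat = chi_square_gof(observed, population)
CRITICAL_1DF_5PCT = 3.841  # chi-square critical value, 1 degree of freedom, alpha = 0.05
print(stat, stat > CRITICAL_1DF_5PCT)  # 72.0 True
```

A statistic far above the critical value, as here, means the respondent mix is very unlikely to be a chance deviation from the population split.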

Wave analysis

Send out your survey in waves, and compare early respondents to late respondents. People who respond later often share characteristics with those who never respond. If you see significant differences between early and late respondents, it could be a sign of nonresponse bias.
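A minimal way to compare waves is to test the difference in mean scores between early and late respondents. The sketch uses Welch’s t statistic, which does not assume the two groups have equal variances. The 0-10 satisfaction scores are invented for illustration, and treating |t| above roughly 2 as a noticeable difference is a rule of thumb for moderate sample sizes, not a formal test.

```python
"""Sketch: wave analysis comparing early and late respondents.

The scores are hypothetical 0-10 satisfaction ratings.
"""
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic for the difference in means of two samples."""
    se_sq = variance(a) / len(a) + variance(b) / len(b)  # sample variances (n - 1)
    return (mean(a) - mean(b)) / sqrt(se_sq)


early_wave = [9, 8, 9, 10, 8, 9, 7, 9]  # answered the first invitation
late_wave = [6, 7, 5, 8, 6, 7, 6, 5]    # answered only after the last reminder

t = welch_t(early_wave, late_wave)
print(f"early mean {mean(early_wave):.3f}, late mean {mean(late_wave):.3f}, t = {t:.2f}")
# early mean 8.625, late mean 6.250, t = 4.86
```

If late respondents resemble non-respondents, a gap like this hints that the people you never heard from would have rated you lower still.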

Follow-up with non-respondents

If possible, try to follow up with a subset of non-respondents to understand why they didn’t respond. You can also try to get them to answer the survey. If their answers differ significantly from those who initially responded, you might have a nonresponse bias.
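If the follow-up targets a random subsample of non-respondents and most of that subsample answers, you can combine both groups into a single estimate, following the double-sampling idea usually credited to Hansen and Hurwitz: weight the initial respondents’ mean by the response rate and the follow-up mean by the nonresponse rate. The counts and scores below are hypothetical.

```python
"""Sketch: combine initial respondents with a follow-up subsample of non-respondents.

All numbers are hypothetical.
"""


def two_phase_estimate(n_sampled, n_responded, respondent_mean, followup_mean):
    """Weight each group's mean by its share of the original sample.

    Assumes the follow-up subsample was drawn at random from the non-respondents
    and that nearly all of it responded; otherwise some bias remains.
    """
    response_rate = n_responded / n_sampled
    return response_rate * respondent_mean + (1 - response_rate) * followup_mean


# 1,000 invited, 400 answered the first request with an average satisfaction
# of 8.1; the follow-up subsample of non-respondents averaged 6.5.
estimate = two_phase_estimate(1_000, 400, 8.1, 6.5)
print(round(estimate, 2))  # 7.14
```

Here the respondent-only mean of 8.1 overstates the combined estimate of 7.14, a sign that the initial respondents were more satisfied than those who did not respond.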

Analyze partial responses

If you have a large number of respondents who began but did not finish the survey, analyze the data you do have from these partial responses. If these partial respondents have different characteristics or gave different answers than those who completed the survey, this could indicate nonresponse bias.
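For example, if you record some attribute for everyone who starts the survey, such as whether they use a mobile device, you can compare its share among partial and complete respondents with a two-proportion z-test. The device counts below are hypothetical; |z| above 1.96 corresponds to a difference that is significant at the 5% level (two-sided).

```python
"""Sketch: compare partial and complete respondents on one characteristic.

Counts are hypothetical; "mobile" stands for any attribute recorded for
everyone who starts the survey.
"""
from math import sqrt


def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z statistic for p_a - p_b."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / se


# 70 of 100 partial respondents were on mobile vs 120 of 300 who completed.
z = two_proportion_z(70, 100, 120, 300)
print(f"z = {z:.2f}, significant at 5%: {abs(z) > 1.96}")  # z = 5.20, significant at 5%: True
```

A result like this would suggest that the survey is harder to finish on phones, so mobile users’ views are underrepresented among completed responses.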

Use external benchmarks

If there are external studies or benchmarks related to your survey topic, compare your results to these external data points. Significant differences may indicate nonresponse bias, although they could also reflect actual differences between your sample and the external population.

How to follow up with non-responders?

Following up with non-respondents can be crucial for increasing your overall response rate and minimizing nonresponse bias. Here are some strategies:

- Reminder emails or messages. A simple reminder can go a long way. Send a gentle nudge to those who haven’t completed the survey yet, reminding them that their opinion is valuable and that the survey is still open. Avoid bombarding them with reminders, as too many can come across as spam.

- Incentivize participation. If you haven’t already, consider offering a small incentive to complete the survey. This could be a discount, entry into a raffle, or early access to a new product or feature.

- Personalize your communication. Personalized messages tend to have more impact. If possible, use the recipient’s name and make the message relevant to them.

- Emphasize the survey’s purpose and importance. Let non-respondents know why their feedback is important and how it will be used. People are more likely to take time to respond if they know their feedback will have a real impact.

- Make it easy. Ensure your survey is user-friendly, quick, and easy to complete. If the initial nonresponse was due to the survey’s length or complexity, consider whether it can be shortened or simplified.

- Show appreciation. Express gratitude for the respondent’s time and effort, both in the follow-up and in the survey itself.

- Assure confidentiality. If your survey deals with sensitive issues, assure respondents that their responses are confidential and will be used responsibly.

That said, it’s essential to respect people’s choice not to participate. Persistent follow-ups can feel invasive and may harm your relationship with potential respondents, so it’s a good idea to give recipients an option to opt out of further communications about the survey.
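The reminder and opt-out advice can be encoded as a simple rule for choosing who gets the next reminder: skip anyone who has completed the survey or opted out, cap the number of reminders, and leave a gap between contacts. The `Invitee` record, the cap of two reminders, and the three-day gap are hypothetical choices for illustration, not settings from any particular survey tool.

```python
"""Sketch: choose who gets the next survey reminder.

The Invitee fields, MAX_REMINDERS and MIN_GAP are hypothetical choices,
not settings from any particular survey tool.
"""
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

MAX_REMINDERS = 2            # cap reminders so follow-ups don't feel like spam
MIN_GAP = timedelta(days=3)  # minimum time between two contacts


@dataclass
class Invitee:
    email: str
    completed: bool = False
    opted_out: bool = False
    reminders_sent: int = 0
    last_contacted: Optional[date] = None


def due_for_reminder(person: Invitee, today: date) -> bool:
    """True if the person hasn't finished, hasn't opted out, and isn't over-contacted."""
    if person.completed or person.opted_out:
        return False
    if person.reminders_sent >= MAX_REMINDERS:
        return False
    return person.last_contacted is None or today - person.last_contacted >= MIN_GAP


def select_reminders(invitees: List[Invitee], today: date) -> List[str]:
    return [p.email for p in invitees if due_for_reminder(p, today)]


today = date(2024, 5, 10)
invitees = [
    Invitee("a@example.com", completed=True, last_contacted=date(2024, 5, 1)),
    Invitee("b@example.com", last_contacted=date(2024, 5, 1)),
    Invitee("c@example.com", opted_out=True, last_contacted=date(2024, 5, 1)),
    Invitee("d@example.com", reminders_sent=2, last_contacted=date(2024, 5, 1)),
    Invitee("e@example.com", reminders_sent=1, last_contacted=date(2024, 5, 9)),
]
print(select_reminders(invitees, today))  # ['b@example.com']
```

After sending, you would increment `reminders_sent` and update `last_contacted`; an opt-out link in each reminder would set `opted_out` so that person is never contacted about the survey again.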

Avoiding nonresponse bias is integral to the success of surveys, as it ensures that the collected data accurately reflects the views and experiences of the target population. Nonresponse bias, which occurs when the respondents differ significantly from those who choose not to respond, can skew the survey results and lead to misinterpretations, potentially jeopardizing strategic decisions based on these insights.

Moreover, treating respondents’ time and privacy with respect also bolsters the relationship with the customers, fostering trust and willingness to participate in future research. This proactive respect can facilitate a more accurate understanding of customer preferences and satisfaction, leading to more effective marketing strategies.

In conclusion, avoiding nonresponse bias isn’t just a methodological concern — it’s a matter of effectively understanding your customers and making informed decisions that drive success in your marketing efforts.